As I’ve made abundantly clear to a lot of people over the years, we should all have been paying more attention to Helen Keller.

Okay, maybe I should clarify that somewhat.

If you were to start talking about Helen Keller to the proverbial person-in-the-street, there are certain touchstones of knowledge that you’d see come into play. Some people will have no idea who Helen Keller is – which I get, because she’s a much bigger deal in the USA than anywhere else. For those folks I’d mention the whole deaf-and-blind-since-infancy thing (which is what most people customarily jump to), as well as the whole Socialism thing (an association that significantly fewer people make), but those are kind of table stakes. They’re showy and textbook inspirational/surprising, but differently-abled socialists aren’t exactly unheard of.

No, the thing I’d really draw attention to is her incisive grasp of the nuances of late twentieth- and early twenty-first-century Information Security, which was as gimlet-sharp as it was eerily predictive – the latter being quite a feat considering that she died in 1968.

What I’m referring to – of course – is this quote:

“Security is mostly a superstition. It does not exist in nature, nor do the children of men as a whole experience it. Avoiding danger is no safer in the long run than outright exposure.”

Helen Keller – “The Open Door”

Now, I’ve used this quote in a lot of talks in a lot of hotel conference rooms near a lot of airports over the years, and once this current plague is over I hope to use it in a lot more. Partly that’s because it’s absolutely worth absorbing, and partly it’s because I like the weird little swag bags you sometimes get as a conference speaker, since I never seem to have enough pens and novelty iPhone chargers. Pursuing absolute security is like blundering into your nearest National Park blindly hoping to bump into a Unicorn; no matter what your intentions, you’re going to end up cold and tired and wet and disappointed.

Security doesn’t exist. It’s a mental and conceptual model that we’ve created so that we can sleep at night, and nothing more. You are not safe from lightning strikes on clear summer days. You can be as cautious and careful as possible and be rigorous in your use of PPE and distancing and still get COVID-19. A meteor could crash through your house while you sleep. Terrible, unexplained, fatal things happen to people all over the world on a daily basis; sure, sometimes the odds are fantastically slim, but you’re still playing a game with those odds.

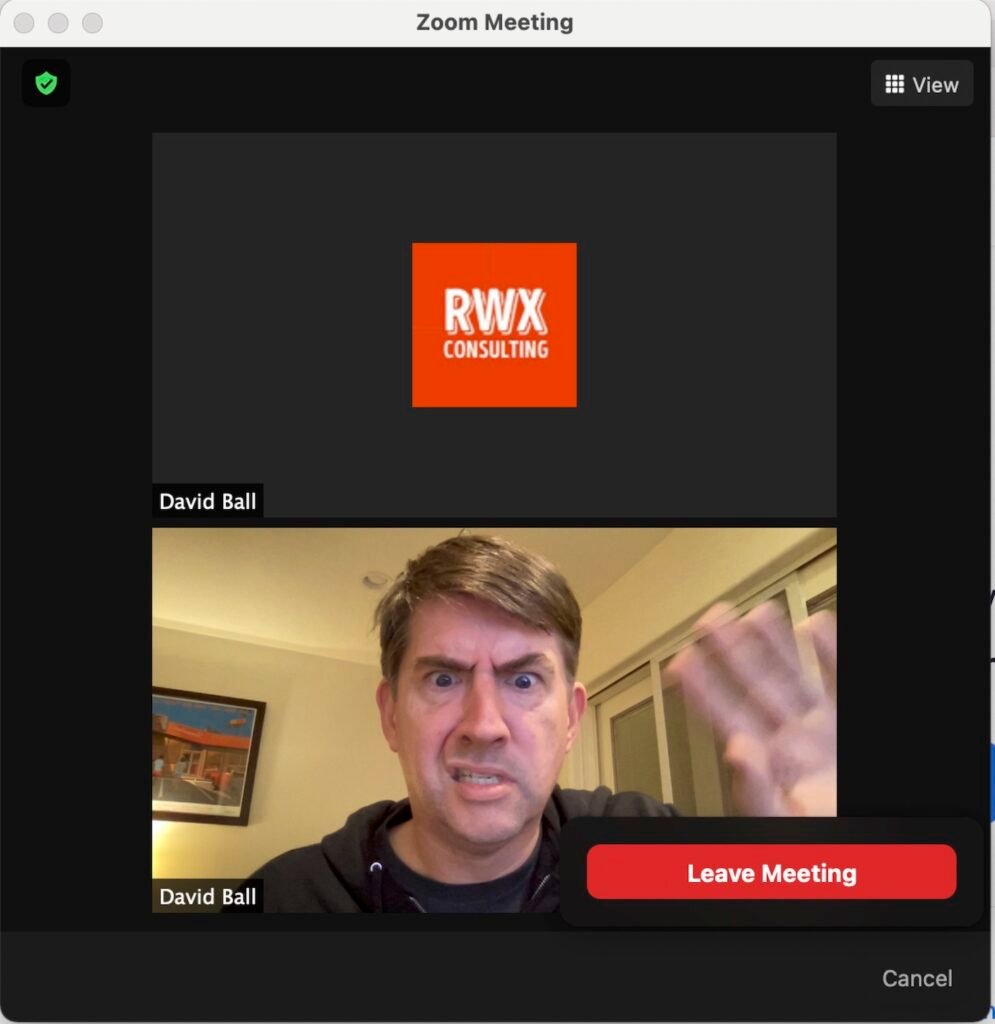

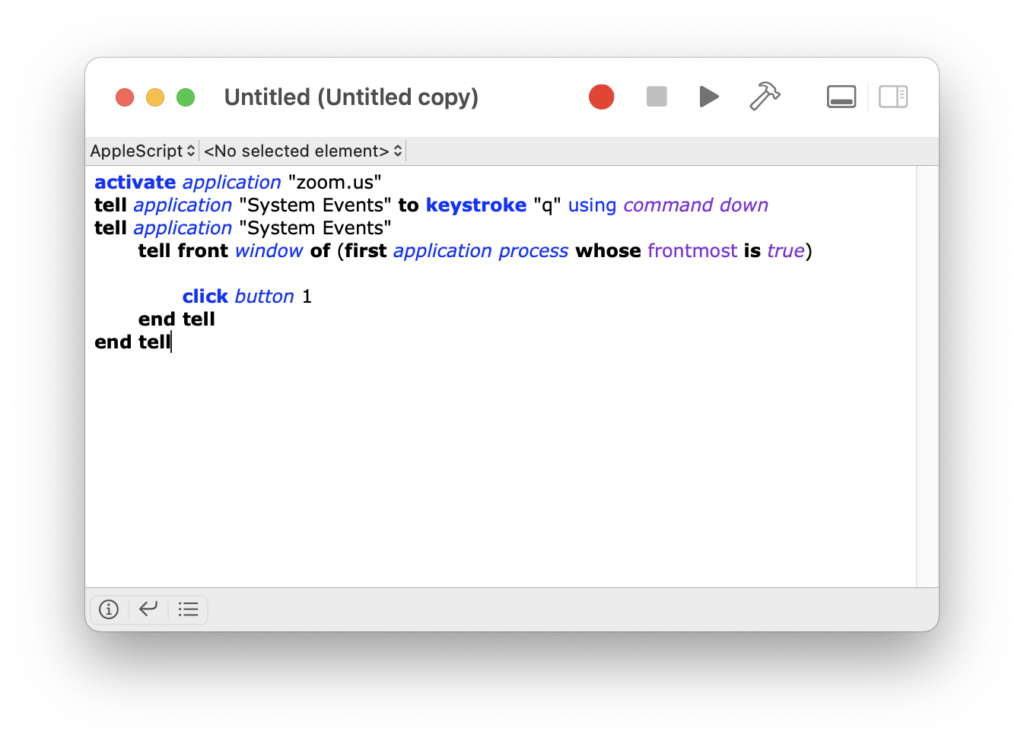

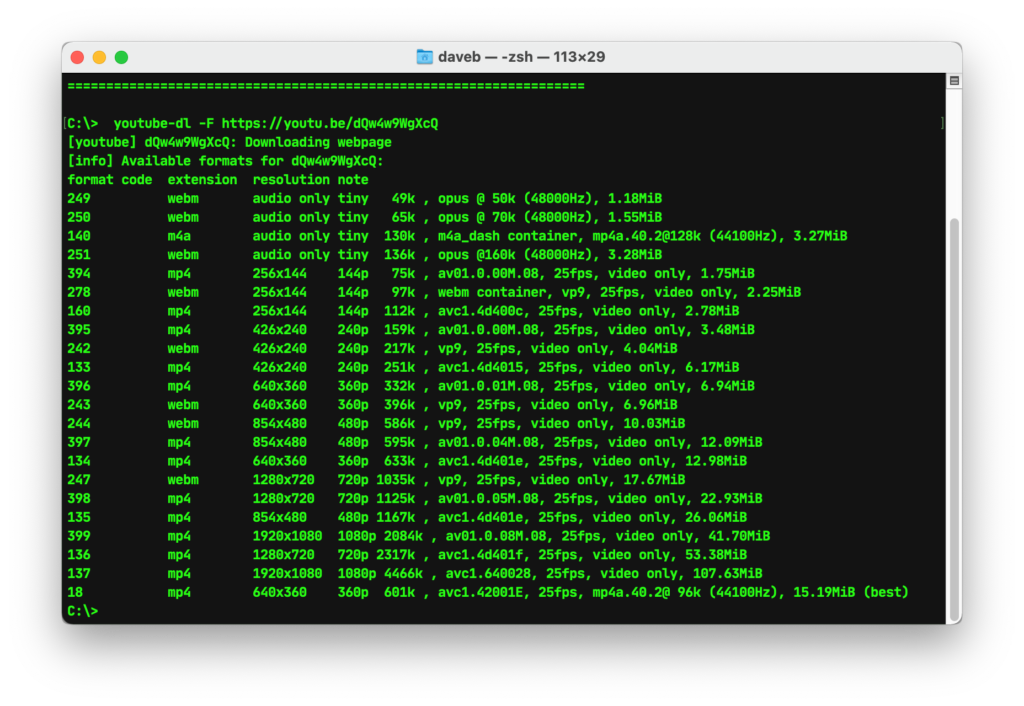

It’s usually after making that point that I bring up the next slide in the deck, which looks a little something like this:

When we talk about “security” what we’re really talking about is “the mitigation of risk.”

This is an unpleasant truth, and when I address it in front of the aforementioned crowds in the aforementioned hotel conference rooms I can usually see the audience do one of two things: dutifully nod and go back to screwing around on Facebook (which is what most conference attendees – whose presence is mandated by their bosses – do anyway), or actually start to pay attention (which, as a person standing on a stage who spent two hours the night before rehearsing in the bathroom mirror, is something I heartily approve of).

This, in an admittedly roundabout fashion, brings us around to this story. If you’re disinclined to follow that link, I’ll lay out the broad strokes thus: during the current imbroglio that is the SolarWinds investigation, another security firm (CrowdStrike) reported that Russian hackers had used compromised access to the vendor that sold it Microsoft Office 365 licenses in an attempt to harvest emails that – because CrowdStrike is a security company – would probably have contained privileged information.

Apple people traditionally like to throw shade at PC people, and as an Apple person I hate being lumped in with that crowd. Talking trash about a company just because you think that its products are inferior to the products of the company that you prefer doesn’t make you right, or sophisticated, or some arbiter of taste. It means that you have an opinion – which is fine – and that your opinion is something that you can’t keep to yourself – which isn’t, and which in turn makes you an asshole. I don’t want to court controversy here, but I’d venture that not being an asshole is a low bar that everyone should really try to clear, or at least strive to.

So, with that in mind, you have to step back and consider this story with a little distance. Sure, this sounds bad – and it is bad – but fair’s fair: CrowdStrike have been forthcoming about the attempted breach, and while it’s fun to sling mud and hand out blame, there’s really no fault in their actions, nor is there any in the actions of Microsoft. (Without more information on the nature of the breach on the vendor’s side there’s little value in making accusations and throwing accountability around, but my hunch is that if we’re hearing about all of this then they’ve probably done the smart thing and been transparent about the issue too.)

The problem here was not Microsoft, nor the vendor, nor CrowdStrike. Give all of them the benefit of the doubt and assume they acted perfectly. No, the problem is that if the model you’ve created to ensure your organizational security isn’t correct, then no matter how well that model is implemented it’s always going to be subject to compromise. Keller’s maxim is universal. Security is superstition.

This article isn’t really about the Microsofts and CrowdStrikes of the world; I can’t speak to that scale of company because I’m an independent IT consultant in a coastal SoCal town, and because I rarely actually bump into that kind of setup. Amazon and Raytheon and the handful of larger enterprises that have facilities around here aren’t my clients, because they’re dealing with issues of size and complexity that entail a full-time staff of in-house dedicated IT support. I’ve been that in-house guy before, and I’m very happy that those clients aren’t in my base (because I like to take weekends off and I like to sleep nights and because being on call 24/7/365 is exhausting). But there are lessons to be taken here that can be applied to smaller-scale organizations. So:

It’s your data.

Passwords, certificates, login credentials – they’re your data. They don’t belong to anyone else, and they shouldn’t be given to anyone else: not to third-party vendors, not indiscriminately to employees, and not even to IT consultants.

I don’t keep my clients’ passwords, because it’s a terrible business practice. Leaving aside the blatantly horrifying liability issues, I firmly believe that clients have the right to fire their IT consultants (and vice versa). I’m fortunate in that I’ve only been fired by one client, and in that case it was less of a firing than a mutual parting of ways (after all, if you’re moving from an all-macOS Server infrastructure to an all-Windows Server infrastructure then there’s relatively little point in keeping the Apple consultant around when the Windows consultant is right there in the mix). On the other hand, I’ve fired a handful of clients over the years, and having both parties able to walk away amicably, secure in the knowledge that nobody owes anybody anything, makes that process easier.

Good IT consulting outfits don’t retain your data. If you forget your password, call your IT person, and they can look it up for you in their records, then you should fire that IT person immediately and change all of your passwords – and I mean all of them. The offending IT person can be of the stoutest character and unimpeachable ethical standards, but if they have your data then they’re a threat, because if a third party can get to their data then that third party also owns yours. There’s little point investing in locks and alarm systems if the person who maintains the locks and alarms leaves your keys and codes lying around their office for their cleaning staff to see.

Your data doesn’t just live on your computer.

It’s not just about the services and credentials that you use inside your organization; it’s also about the services and credentials that reside outside your organization. Some of the most critical things that affect your ability to do business are some of the most often overlooked, and a prime example is DNS.

DNS – and this is an immensely stripped-down explanation, so don’t shoot me – is the mechanism by which the internet knows where computers and servers actually are and what they do. DNS servers tell the world where your website is, and where your email server resides. Unless you’re hosting your own DNS server (which is thankfully a rarer occurrence these days), your DNS host has the power to – deliberately or not – completely cut off and isolate your organization from the internet.

This sounds like a worst-case scenario, but I’ve seen it a lot more than I’d like: organizations that let third parties administer their DNS without giving any control to the organization. If I had a nickel for every time I’ve asked a client if they have any documentation or information on where their DNS is hosted and then had nothing in return but a blank, panicked stare, I’d have… I don’t know. A lot of nickels.

And again, that’s understandable. The structural mechanics of How The Internet Works are a conceptual handful, and there’s no practical need for most people to stay on top of them as a matter of course. But you do need to be able to lay your hands on that information when it matters.

Have a secure repository for your data.

Yes, yes, I know: “Security is mostly a superstition” and so forth. That’s a given, but the rest of the Keller quote – the part that I don’t generally include in the talks at the conferences in the hotels near the airports – runs as follows: “Life is either a daring adventure, or nothing.” It’s easy to take that as a carefree expression of the vital need to embrace a zest for life, but I look at it as something more chilling. “Daring adventures” are a hell of a lot better than doing nothing, after all, and it’s better to have a carefully thought-through and protected repository for your data than it is to write everything down in a book and leave it on your desk, or throw it all into a FileMaker database or a spreadsheet on your server marked “Passwords”.

(Note: Those are actual examples of things I’ve moved actual people away from doing.)

Take some time and find the right tool for documentation. Something cloud-based would be good; better yet, something with a lot of redundancy and good encryption options. I like IT Glue, but that’s just a personal preference. If you’re at the appropriate scale, look into having something written for you by a decent web/database person; there are options to explore in this space. Just don’t blindly put everything into one bucket that lives on your computer (which can be stolen, damaged, or hacked, or can just decide to die one day), or, equally blindly, throw it all into a Google Workspace or Office 365 document.

Know where your keys are.

I don’t mean “keys” in the PKI sense (well, okay, maybe I do, but that’s not where I’m going with this) – I mean the keys to the things that run your business. I’ve already mentioned DNS, but there’s also Domain Registration. Do you know where your domain is registered? Whose account was used for the registration? When it expires? What about organizational Apple IDs used to administer Apple Business Manager, or APNS? What about software licenses? How are you tracking that data? Whose account was used to purchase those, and from what vendor?

That’s a lot of questions, and I apologize, but not much. It’s a regrettable truth that when your job often involves going into organizations experiencing systemic trouble, you tend to see only the worst-case scenarios, and in those kinds of cases it’s not uncommon to discover that the absolutely critical piece of information or credential is locked behind a defunct email address, or was originally set up without documentation by a former employee, or, more often than not, is just missing in action without a trace or a clue.

There’s nothing that can’t be fixed (well, very little that can’t be fixed), but some fixes are well-documented and quickly squared away because there’s a clear chain of information, while others can take literally days of complete downtime and mountains of billable hours. Don’t get me wrong; I enjoy billable hours. I just don’t particularly enjoy billing them for problems that could easily have been averted.

Make sure that you’re being diligent in how you implement products and services, and that there are established procedures for how those are accessed and serviced. Apple recommends having a specific Apple ID for organizations just for administering Volume Purchasing/MDM, but I’d go further and suggest setting up a specific administrative account that’s used as the contact for everything else: web, DNS, registration, licensing, the whole nine yards. Not an account that’s regularly used by an individual, either; an account that’s reserved purely for that specific purpose and that alone, with critical notifications forwarded to the people inside the organization who need to see them.
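One way to make the “where are your keys?” questions answerable on demand is to keep a plain inventory of each asset (the vendor, the account on file, and the expiry date) and check it periodically. The Python sketch below is purely illustrative: the `Asset` record, the `audit` function, the `ADMIN_CONTACT` address and the sample entries are all hypothetical, and in practice the inventory would live in whatever documentation tool you’ve chosen rather than in a script. It flags anything whose contact isn’t the dedicated administrative account, plus anything already expired or expiring within a configurable window.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical dedicated administrative account; substitute your own.
ADMIN_CONTACT = "it-admin@example.com"


@dataclass
class Asset:
    name: str                # what this is, e.g. "example.com registration"
    kind: str                # "domain", "dns", "apple-id", "license", ...
    vendor: str              # registrar, DNS host, reseller, etc.
    contact: str             # the account the vendor has on file
    expires: Optional[date]  # None if the asset doesn't expire


def audit(assets: List[Asset], today: date, warn_days: int = 60) -> List[str]:
    """Return warnings for assets tied to the wrong account or near expiry."""
    warnings = []
    horizon = today + timedelta(days=warn_days)
    for a in assets:
        # Anything registered to a personal (or departed) account is a risk.
        if a.contact != ADMIN_CONTACT:
            warnings.append(f"{a.name}: contact is {a.contact}, not the admin account")
        if a.expires is not None:
            if a.expires < today:
                warnings.append(f"{a.name}: EXPIRED on {a.expires.isoformat()}")
            elif a.expires <= horizon:
                warnings.append(f"{a.name}: expires {a.expires.isoformat()}")
    return warnings


# Hypothetical sample inventory.
inventory = [
    Asset("example.com registration", "domain", "Registrar A", ADMIN_CONTACT, date(2021, 3, 1)),
    Asset("example.com DNS", "dns", "DNS Host B", "former.employee@example.com", None),
    Asset("Office suite licenses", "license", "Reseller C", ADMIN_CONTACT, date(2022, 1, 15)),
]

if __name__ == "__main__":
    for line in audit(inventory, today=date(2021, 2, 1)):
        print(line)
```

Run on a schedule, or just before each renewal cycle, a check like this turns “when does the domain expire, and whose account is it on?” into a line in a report rather than a blank, panicked stare.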

What Helen Keller got wrong.

To be perfectly fair, there’s not a lot to say here. The only thing I’d add is that, philosophically at least, there’s little value in accepting the “Security as superstition” maxim at face value. Yes, the broad strokes are accurate, but while the idea of safety is something that we’ve constructed with our meaty, inefficient animal brains, we’ve also managed to create systems that are more capable of dealing in absolutes. Nobody is going to start declaring Public Key Infrastructure the greatest invention since fire/the wheel/sliced bread etc., but the fact remains that we live in a world where danger is starting to run on diminishing returns. You can narrow the risks, slicing them into thinner swathes than ever before, because we now have better, more precise, stronger tools with which to protect ourselves.

These are – as has become abundantly clear over the last twelve months or so – Unprecedented Times. While we’re trotting out tired platitudes I’ll throw “the world is getting smaller” into the ring, because that and the unprecedented-times bit tie in pretty neatly: when we’re able to communicate faster and more completely, our connections contract. They become less nuanced, more immediate, and far, far more polarizing, creating systems so vast that simple fixes are less likely to be attended to and more likely to be overlooked or misunderstood.

Earlier I wrote about Security as an abstract mental model, and I think that’s an important way to consider it. Models are, to my way of thinking, the primary way that we’re able to containerize the outside world and build frames of reference and connections that adequately map our personal constructs of our personal worlds to the reality we actually live in. Both people and organizations exist and integrate with each other by creating and maintaining those models of the world, and with rapid change those models have to be updated and checked and refitted on a continual basis, whether you’re considering correct personal pronoun usage or assessing organizational network weaknesses. The only way to stay relevant is to keep reacting, adapting, and maintaining those models continuously, and attacks like the SolarWinds incident suggest that bad actors are becoming more determined and focused in turn.

At the end of the day, the responsibility for your data lies with you and you alone. It’s an uncomfortable truth (after all, it’s much more fun to blame someone else when everything goes horribly wrong), so selecting the right tools and approaches to protect that data is something best done carefully and with considered understanding. Your model is never going to be perfect, but the sooner you can accept and internalize that, the sooner you can adopt a critical approach to remedying potential threats.